By Ajat Prabha

Courier is the superhighway between mobile devices and our backend servers. It’s a persistent connection through which we’re able to push content from our server to the app. In Part 1 of this blog, we talked about why we needed a message broker that could give us an always-connected channel to our clients in the first place, and why we chose the MQTT protocol as our solution.


Since MQTT is a protocol, there are many broker implementations to choose from. We had a few constraints, though:

- It has to be an open-source broker

- It has to be performant

- It has to be highly-available

- It must have great persistence capabilities

With the above requirements in mind, we considered a few options: Apache ActiveMQ, emitter, EMQX, HiveMQ, mosquitto, and VerneMQ.

We later dropped EMQX and HiveMQ because their open-source versions were quite limiting, and we were not ready to go for an enterprise solution while this was still experimental. Emitter does not completely adhere to the MQTT protocol, so we dropped that as an option as well. ActiveMQ, on the other hand, does support MQTT, but it is not the primary protocol it was designed for, so we did face some hiccups down the line.

Benchmarks

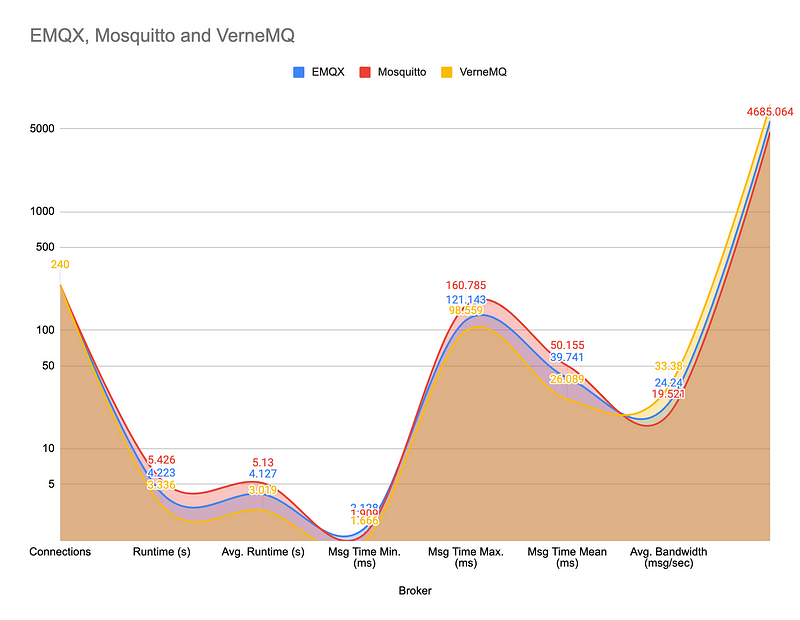

MQTT is a very lightweight protocol because it was initially designed for IoT devices, which are low on resources and power. As a result, most of the brokers that we tested performed well.

In our initial testing, we used a simple tool called mqtt-benchmark to generate some performance stats. Here is a comparison between the three remaining options (ActiveMQ, Mosquitto, and VerneMQ).

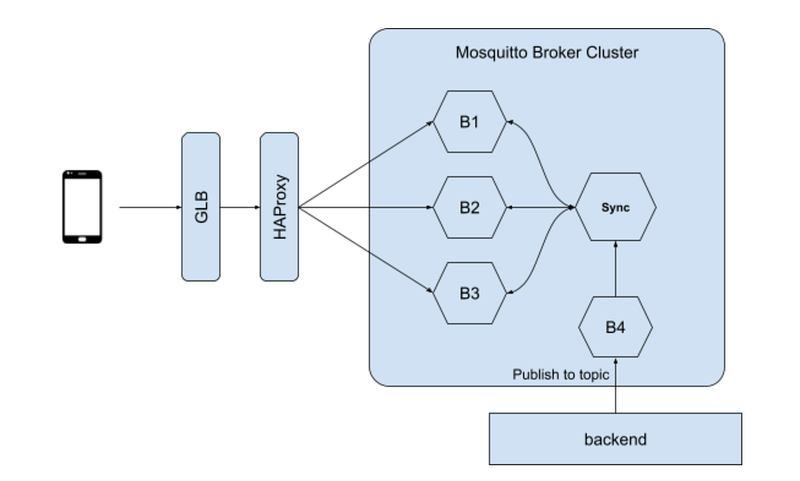

That narrowed our choice down to Mosquitto and VerneMQ. However, Mosquitto has no native support for clustering. There is a hacky workaround, which uses one broker instance as a synchronisation broker, but that still leaves a single point of failure.

Enter VerneMQ

VerneMQ describes itself as a broker which is capable of clustering MQTT for high availability and scalability.

It is written in Erlang, which is known for its efficiency and for building massively scalable, soft real-time systems with high-availability requirements. Erlang is used in telecom, banking, and similar domains, and its runtime system has built-in support for concurrency, distribution, and fault tolerance.

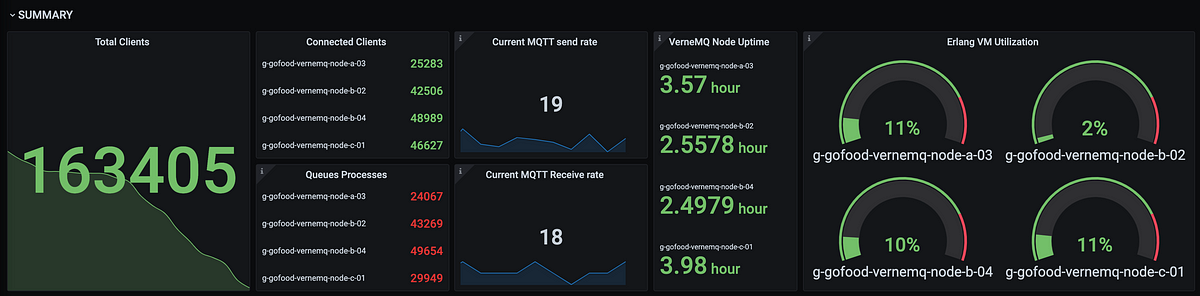

This time, we wrote some pseudo-code to simulate production-like traffic patterns. Our goal was to reach 200K active clients on a cluster of four GCP n1-standard-2 VMs, each with 2 vCPUs and 7.5 GB of RAM.

Our setup

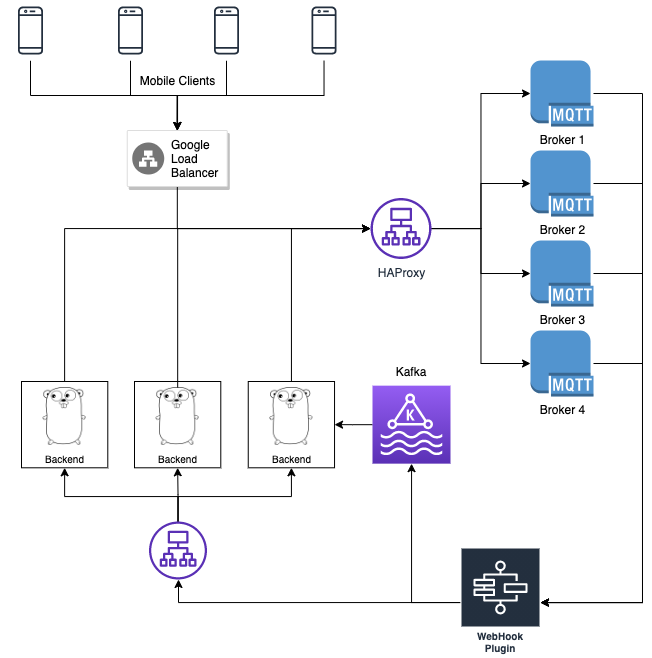

We use a highly available deployment of VerneMQ brokers clustered together. The traffic is load-balanced by a set of HAProxy instances, which pass each connection on to a broker as an opaque TCP connection.

Mobile clients connect through the Google Cloud Load Balancer, which injects ProxyProtocol into the TCP connection before it is passed on to HAProxy.

Backends connect directly to HAProxy, which injects ProxyProtocol itself only for these backend connections. Our broker is configured to decode the ProxyProtocol header on every connection before performing any other logic.
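To make the decoding step more concrete, here is a minimal Python sketch of splitting a PROXY protocol header off the front of a TCP stream. It assumes the human-readable version 1 format; the source setup does not state which version is in use, and this is an illustration, not how VerneMQ implements it. The names `ProxyInfo` and `split_proxy_v1` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ProxyInfo:
    family: str                 # "TCP4", "TCP6" or "UNKNOWN"
    src_addr: Optional[str]     # original client address
    dst_addr: Optional[str]
    src_port: Optional[int]
    dst_port: Optional[int]


def split_proxy_v1(data: bytes) -> Tuple[ProxyInfo, bytes]:
    """Return the parsed v1 header and the bytes that follow it."""
    if not data.startswith(b"PROXY "):
        raise ValueError("missing PROXY protocol v1 signature")
    end = data.find(b"\r\n")
    # A v1 header is at most 107 bytes including the trailing CRLF.
    if end == -1 or end > 105:
        raise ValueError("incomplete or oversized header")
    fields = data[:end].decode("ascii").split(" ")
    rest = data[end + 2:]
    if fields[1] == "UNKNOWN":
        # The proxy could not determine the addresses; the rest is ignored.
        return ProxyInfo("UNKNOWN", None, None, None, None), rest
    if fields[1] not in ("TCP4", "TCP6") or len(fields) != 6:
        raise ValueError("malformed header")
    _, family, src, dst, sport, dport = fields
    return ProxyInfo(family, src, dst, int(sport), int(dport)), rest


# Example: a header followed by the first bytes of an MQTT CONNECT packet.
stream = b"PROXY TCP4 203.0.113.7 10.0.0.5 51234 1883\r\n\x10\x0c"
info, payload = split_proxy_v1(stream)
```

After the split, `info.src_addr` carries the real client address, and `payload` is what the broker would treat as the start of the MQTT session.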

The WebHook plugin can relay events to backends either synchronously or asynchronously.

More load testing

We ran initial benchmarks on multiple brokers and pushed VerneMQ to its limits. The initial tests were designed to analyse how many connections it could handle, and we easily got up to 160K+.

We have also run tests with well over 200 msg/sec and 300K connected clients. The outcome of such tests relies heavily on the use case, so we’re currently working on a declarative load-testing solution. More on that in a subsequent blog post.

Other Features

Webhook Plugin

VerneMQ has a ton of good features that make it quite extensible and adaptable to your use case. One such feature is its support for plugin development: you can either write Erlang-based plugins or use the out-of-the-box HTTP-based webhook plugin.
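As a rough illustration of the receiving side of the webhook plugin, here is a minimal Python sketch of a handler that turns one hook call into an event record and hands it to a publisher. Everything named here is an assumption for the example: `handle_hook`, the `publish` callable (a stand-in for whatever producer sits behind the webhook server), and the topic name `courier-events` are hypothetical. The `{"result": "ok"}` body should match the success response the webhook plugin expects, but check it against the VerneMQ documentation before relying on it.

```python
import json
import time
from typing import Callable, Tuple


def handle_hook(
    hook_name: str,
    body: bytes,
    publish: Callable[[str, bytes], None],
    now: Callable[[], float] = time.time,
) -> Tuple[int, bytes]:
    """Wrap one webhook call in an event record and forward it."""
    try:
        payload = json.loads(body)
    except ValueError:
        # Covers both invalid JSON and undecodable bytes.
        return 400, b'{"error": "invalid json"}'
    event = {"hook": hook_name, "received_at": now(), "payload": payload}
    # Hypothetical topic name; the real pipeline's naming is not in the post.
    publish("courier-events", json.dumps(event).encode("utf-8"))
    return 200, b'{"result": "ok"}'


# Example usage with an in-memory list standing in for the producer.
events = []
status, response = handle_hook(
    "on_publish",
    b'{"client_id": "device-1", "topic": "chat/42"}',
    lambda topic, value: events.append((topic, json.loads(value))),
    now=lambda: 1.0,
)
```

Keeping the handler a plain function with an injected `publish` makes it easy to test without a running HTTP server or message queue.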

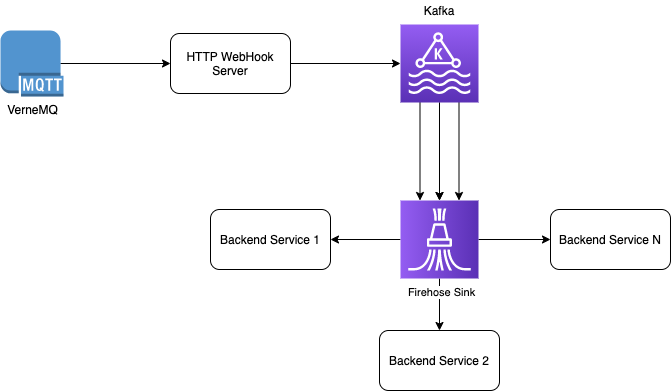

We have created a data pipeline using our in-house data solutions. It looks like this:

This makes it super easy for backend applications to react to any event inside the VerneMQ cluster.

We use this flow to check end-to-end message delivery:

- We publish a message

- The message is relayed to mobile

- Mobile sends an ACK

- VerneMQ calls the WebHook server, which puts the event on Kafka

- Firehose consumes this event and informs the backend service that the message has been ACKed

- If the ACK event is not received within N seconds, we send a high-priority FCM push notification to the mobile device, which takes a wake lock and reconnects to Courier

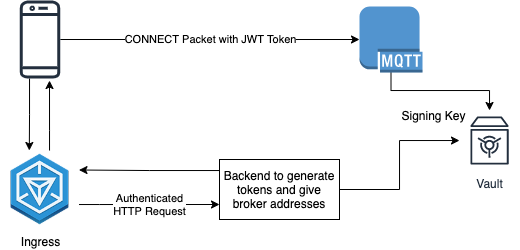
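The timeout step in this flow can be sketched as a small tracker of unacknowledged messages. This is a minimal Python illustration under stated assumptions, not our production code: `AckTracker`, its method names, and the `send_push` callback (a stand-in for the FCM call) are all hypothetical, and the clock is injectable so the behaviour can be checked without waiting.

```python
import time
from typing import Callable, Dict, List, Tuple


class AckTracker:
    """Remember published messages and fall back to a push if no ACK arrives."""

    def __init__(
        self,
        timeout_s: float,
        send_push: Callable[[str, str], None],
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._timeout_s = timeout_s
        self._send_push = send_push
        self._clock = clock
        # message_id -> (device_id, deadline)
        self._pending: Dict[str, Tuple[str, float]] = {}

    def published(self, message_id: str, device_id: str) -> None:
        """Record a message that now awaits an ACK."""
        self._pending[message_id] = (device_id, self._clock() + self._timeout_s)

    def acked(self, message_id: str) -> None:
        """Called when the ACK event reaches the backend service."""
        self._pending.pop(message_id, None)

    def sweep(self) -> List[str]:
        """Send a fallback push for every message whose deadline has passed."""
        now = self._clock()
        expired = [m for m, (_, deadline) in self._pending.items() if deadline <= now]
        for message_id in expired:
            device_id, _ = self._pending.pop(message_id)
            self._send_push(device_id, message_id)
        return expired


# Example usage with a fake clock and an in-memory push log.
fake_now = [0.0]
pushes = []
tracker = AckTracker(3.0, lambda device, msg: pushes.append((device, msg)),
                     clock=lambda: fake_now[0])
tracker.published("m1", "device-1")
tracker.published("m2", "device-2")
tracker.acked("m1")          # device-1 acknowledged in time
fake_now[0] = 5.0            # N = 3 seconds have passed
expired = tracker.sweep()    # only m2 triggers the fallback push
```

In a real service, `sweep` would run periodically, and the pending map would likely live in a shared store rather than in process memory.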

Authentication Plugin

VerneMQ is very extensible and comes with many authentication mechanisms out of the box. We wanted a simple yet easily controllable solution for authentication, so we wrote a small Erlang plugin that verifies the client’s identity from a JWT.
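For readers unfamiliar with what verifying a JWT involves, here is a minimal sketch of the check. It is written in Python for readability rather than Erlang, and it assumes HMAC-SHA256 (HS256) signing with a shared secret; the post does not say which algorithm or claims the actual plugin uses. The function names `sign_hs256` and `verify_hs256` are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments drop base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Build a token; included only so the example is self-contained."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{body}".encode("ascii"), hashlib.sha256)
    return f"{header}.{body}.{_b64url_encode(sig.digest())}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and expiry check out, else raise."""
    try:
        header_b64, body_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(body_b64))
        signature = _b64url_decode(sig_b64)
    except ValueError as exc:
        raise ValueError("malformed token") from exc
    if not isinstance(header, dict) or not isinstance(claims, dict):
        raise ValueError("malformed token")
    # Pin the algorithm instead of trusting whatever the header asks for.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    signed_part = f"{header_b64}.{body_b64}".encode("ascii")
    expected = hmac.new(secret, signed_part, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if exp is not None and current >= exp:
        raise ValueError("token expired")
    return claims


# Example usage with a throwaway secret.
secret = b"example-secret"
token = sign_hs256({"sub": "user-1", "exp": 100}, secret)
claims = verify_hs256(token, secret, now=50)
```

The two details worth copying into any real implementation are pinning the expected algorithm and comparing signatures in constant time.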

Watch this space for more updates on the Android and iOS client libraries and how we solved the problems we faced in them.

Also, do watch the talk by the folks at Bose from KubeCon NA 2018: they scaled VerneMQ to 5 million concurrent connections!
