By Rohan Lekhwani

Gojek serves millions of customers by connecting them to over 2.6 million driver partners across hundreds of cities in Southeast Asia.

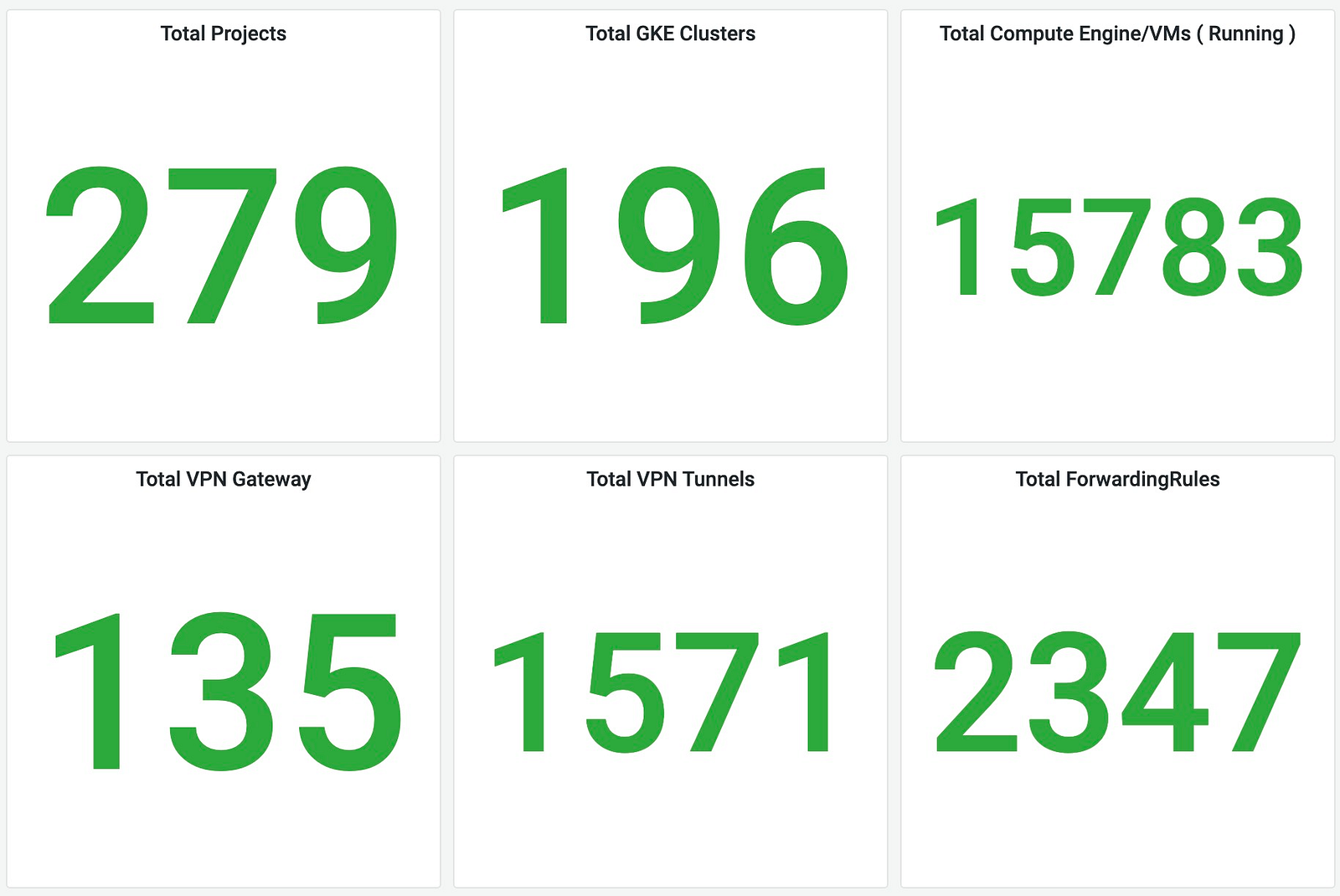

The cloud infrastructure needed to handle such a load is correspondingly large, and Google Cloud Platform has played an integral role in it. At any given time, more than 15,000 VMs are running across 250+ projects.

How can such a huge infrastructure be managed effectively? The answer — Infrastructure as Code (IaC).

IaC lets you provision infrastructure declaratively while maintaining consistency and accountability across your organization.

We previously tried to solve our infrastructure problems with IaC through Olympus, but it lacked the right balance between giving users the freedom to manage their own infrastructure and enforcing code standards in one place.

Introducing Skynet

Using Skynet, product groups (PDGs) across Gojek can provision and manage their infrastructure all by themselves. Here’s a textbook definition:

Skynet is a highly opinionated and curated set of IaC, designed to act as a monorepo across multiple providers and environments. It aims to provide a single source of truth of the “as implemented” resources and configuration.

Architecture

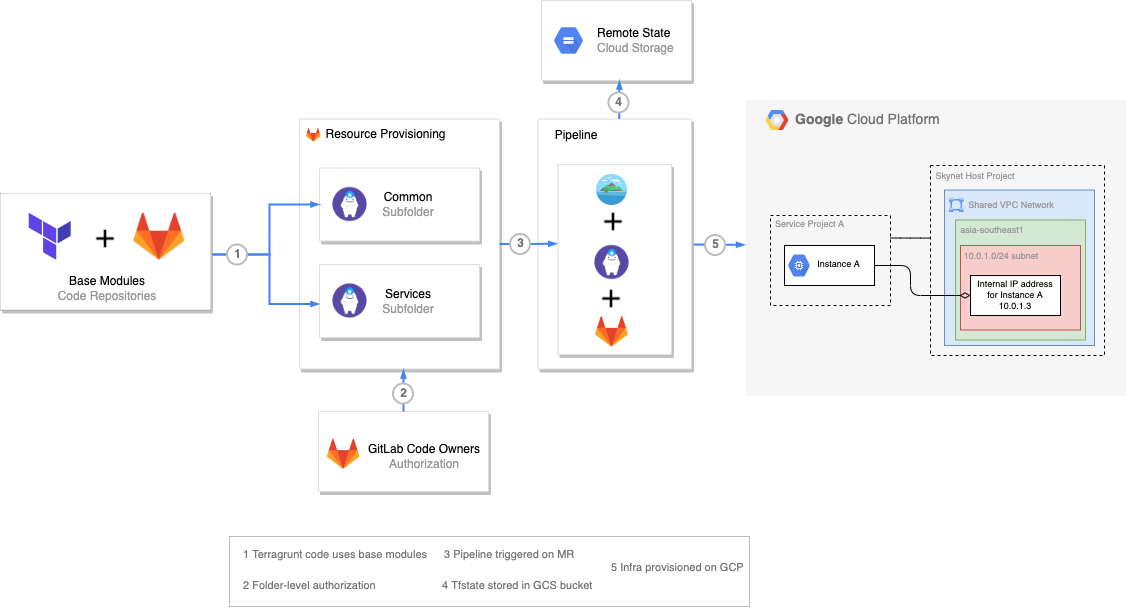

Within GCP, Skynet follows a shared VPC architecture in which each environment has a host project whose network is shared with multiple service projects. This architecture is mirrored in the Skynet repository on GitLab through a monorepo code structure.
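For readers less familiar with shared VPC, the host/service relationship can be expressed with two resources from the Terraform Google provider. This is a minimal sketch, not Skynet's module code: the project IDs are hypothetical, and in Skynet these resources would sit behind its own base modules.

```hcl
# Hypothetical project IDs for a single environment.
variable "host_project" {
  type    = string
  default = "example-host-p"
}

variable "service_projects" {
  type    = set(string)
  default = ["example-svc-a-p", "example-svc-b-p"]
}

# Marks the host project as a shared VPC host.
resource "google_compute_shared_vpc_host_project" "host" {
  project = var.host_project
}

# Attaches each service project to the host project's network.
resource "google_compute_shared_vpc_service_project" "service" {
  for_each        = var.service_projects
  host_project    = google_compute_shared_vpc_host_project.host.project
  service_project = each.value
}
```

Attaching a service project only makes it eligible to use the host network; subnet-level access (for example roles/compute.networkUser) is granted separately.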

Skynet is composed of three major components — Base Modules, Resource Provisioning and Atlantis CI.

Base Modules

Modules are the core of our reusable Terraform code and configure how infrastructure is deployed. We have more than 40 Terraform modules based on the Cloud Foundation Toolkit (CFT) covering the most commonly provisioned resources, including folders, projects, networks, project IAM and service accounts.

Internally, the modules enforce a standard naming convention for all resources while still allowing full configuration flexibility. They are also integrated with internal tools such as Fortknox, our secret management tool, and Cerebro, our IPAM and DCIM tool. This makes the modules easy to use without giving up the benefits of our internal tooling.
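The post does not publish the naming convention itself, so the following only illustrates how a base module could enforce one: it validates the same realm, env and seq_num inputs that appear in the network example later in this post and derives the resource name from them. The name pattern and the validation rules are assumptions.

```hcl
variable "project_id" {
  type = string
}

variable "realm" {
  type        = string
  description = "Owning realm, e.g. \"skynet\"."
  validation {
    condition     = can(regex("^[a-z][a-z0-9-]*$", var.realm))
    error_message = "The realm must start with a letter and use only lowercase letters, digits and hyphens."
  }
}

variable "env" {
  type        = string
  description = "Single-letter environment code, e.g. \"p\"."
  validation {
    condition     = can(regex("^[a-z]$", var.env))
    error_message = "The env must be a single lowercase letter."
  }
}

variable "seq_num" {
  type = string
  validation {
    condition     = can(regex("^[0-9]{2}$", var.seq_num))
    error_message = "The seq_num must be a two-digit string such as \"01\"."
  }
}

variable "description" {
  type    = string
  default = ""
}

locals {
  # Assumed pattern: <realm>-<env>-vpc-<seq_num>, e.g. "skynet-p-vpc-01".
  network_name = "${var.realm}-${var.env}-vpc-${var.seq_num}"
}

resource "google_compute_network" "this" {
  project                 = var.project_id
  name                    = local.network_name
  description             = var.description
  auto_create_subnetworks = false
}
```

Because callers never pass a name directly, every network built through such a module ends up with a name in the same shape.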

Each base module is maintained as a version-controlled repository in the tf-modules GitLab group.

```text
tf-modules (GitLab subgroup for grouping)
|- gcp_folder (Git repo for GCP Folder base module)
|- gcp_project (Git repo for GCP Project base module)
|- gcp_network (Git repo for GCP Network base module)
|…
```

Resource Provisioning

We use Terragrunt, a wrapper over Terraform. Its built-in functions and blocks, such as include and dependency, let us keep our code completely DRY. Here's how you would provision a network in Skynet:

```hcl
# Read outputs (such as project_id) from the project's Terragrunt configuration.
dependency "project" {
  config_path = "${get_parent_terragrunt_dir()}/gcp/gojek_org/common/project/gojek/skynet"
}

# Inherit shared configuration from the nearest parent terragrunt.hcl.
include {
  path = find_in_parent_folders()
}

# Use the network base module, pinned to a released tag.
terraform {
  source = "<path>/tf-modules/gcp_network.git?ref=v1.6.4"
}

inputs = {
  project_id      = dependency.project.outputs.project_id
  shared_vpc_host = true
  seq_num         = "01"
  description     = "This is a global VPC for project."
  env             = "p"
  realm           = "skynet"
}
```

Skynet aims to be a monorepo for all infrastructure provisioned at Gojek. Consequently, the repository structure must give product groups (PDGs) ownership of their infrastructure while letting us, the maintainers, enforce standards. How do we achieve this?

```text
skynet (Git Repository)
|- gcp
| |- terragrunt.hcl (GCP root level Terragrunt configuration)
| |- gojek_org
| | |- org_vars.hcl (Shared variables for gojek_org as tfvars/hcl)
| | |- common (Folder for centrally managed infrastructure)
| | | |- folders, projects, networks, iam, etc
| | |- services (Folder for PDG managed service infrastructure)
| | | |- clusters, vms, MIGs, instance templates, etc
| |- other_orgs
|- other_terraform_resources (NewRelic, etc)
```

The common folder contains maintainer-managed infrastructure that PDGs do not have access to. The services folder contains mutually isolated sub-folders, one per PDG, where each PDG commits code for its own infrastructure while consuming the centrally managed infrastructure in the common folder through Terragrunt dependencies.
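A PDG-owned configuration under services can consume centrally managed outputs the same way the network example consumes the project. The sketch below is hypothetical: the directory, the gcp_instance_template module, its inputs and the network_self_link output name are illustrative and not taken from Skynet.

```hcl
# Hypothetical file: gcp/gojek_org/services/<pdg>/instance_template/terragrunt.hcl

# Inherit the shared root configuration.
include {
  path = find_in_parent_folders()
}

# Read outputs from maintainer-managed infrastructure in the common folder.
dependency "network" {
  # Hypothetical path to a network managed under common/.
  config_path = "${get_parent_terragrunt_dir()}/gcp/gojek_org/common/network/example"

  # Placeholder values so validate/plan work before the network is applied.
  mock_outputs = {
    network_self_link = "projects/example/global/networks/example"
  }
  mock_outputs_allowed_terraform_commands = ["validate", "plan"]
}

terraform {
  # Hypothetical base module; "<path>" stays elided, as in the network example.
  source = "<path>/tf-modules/gcp_instance_template.git?ref=v0.1.0"
}

inputs = {
  network = dependency.network.outputs.network_self_link
  env     = "p"
  realm   = "example-pdg"
}
```

The PDG never edits anything under common; it only reads that folder's outputs, which is what keeps ownership split cleanly.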

Isolation between PDG-level folders is enforced with Code Owners, a GitLab Enterprise feature. It lets each PDG decide who can approve merge requests to the sub-folders that hold its infrastructure code.

Atlantis CI

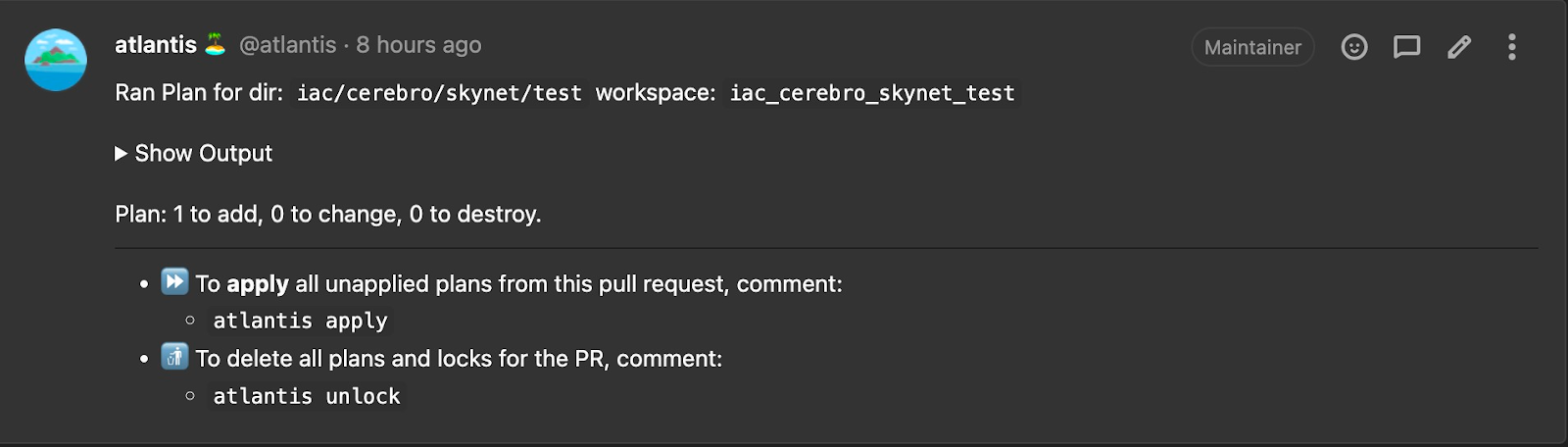

Our previous attempts to maintain IaC had one major drawback — users had to wait for multiple, unrelated pipelines to succeed before they could apply their Terraform plans.

How do we run plan and apply only for what a change touches, while letting PDGs control who can deploy their infrastructure, directly on GitLab?

Enter Atlantis.

Atlantis is a CI tool that posts plan and apply outputs as comments directly on merge requests. We use custom workflows to run Terragrunt plan and apply on MRs, and terragrunt-atlantis-config to build a dependency graph from which a repo-level atlantis.yaml is generated. Other settings include requiring MR approval before apply, automerging, and deleting the branch after apply.

Our Atlantis server URL is exposed through an internal load balancer backed by a managed instance group (MIG). We use a modified version of a Chef cookbook to configure Atlantis at VM startup. The MIG's instance template uses a machine image with custom Terraform plugins for our internal tools pre-installed. Webhook secrets and GitLab tokens are fetched directly onto the server from our internal secret manager.
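A minimal sketch of the Atlantis compute layer, assuming the Terraform Google provider: an instance template with only an internal IP and the Atlantis service account attached, plus a regional MIG that exposes Atlantis's default port 4141. The post mentions a machine image; this sketch uses a custom boot image (source_image) instead, which is an assumption. The image, subnet, machine type and names are hypothetical, the Chef-based startup and secret fetching are left out, and the internal load balancer (health check, backend service, forwarding rule) is omitted for brevity.

```hcl
variable "project_id" {
  type = string
}

variable "region" {
  type = string
}

variable "subnetwork" {
  type        = string
  description = "Self link of the subnet the Atlantis VMs attach to."
}

variable "atlantis_image" {
  type        = string
  description = "Hypothetical boot image with the internal Terraform plugins pre-installed."
}

variable "atlantis_sa_email" {
  type        = string
  description = "Email of the role-less service account used by Atlantis."
}

resource "google_compute_instance_template" "atlantis" {
  project      = var.project_id
  name_prefix  = "atlantis-"
  machine_type = "e2-standard-4" # assumed size

  disk {
    source_image = var.atlantis_image
    boot         = true
    auto_delete  = true
  }

  # No access_config block, so instances get only an internal IP.
  network_interface {
    subnetwork = var.subnetwork
  }

  service_account {
    email  = var.atlantis_sa_email
    scopes = ["cloud-platform"]
  }

  # Templates are immutable; create the replacement before deleting the old one.
  lifecycle {
    create_before_destroy = true
  }
}

resource "google_compute_region_instance_group_manager" "atlantis" {
  project            = var.project_id
  name               = "atlantis-mig"
  region             = var.region
  base_instance_name = "atlantis"
  target_size        = 1

  version {
    instance_template = google_compute_instance_template.atlantis.self_link
  }

  # Atlantis listens on 4141 by default; an internal LB backend would target this port.
  named_port {
    name = "atlantis"
    port = 4141
  }
}
```

target_size is 1 because, by default, Atlantis keeps its locks and plan files on the server's local disk, so extra replicas would not share that state.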

Restricting Access

While access to PDG-specific folders in the Skynet repository can be controlled with GitLab Code Owners, a bigger challenge is preventing users from making a mess through the Google Cloud Console.

A simple rule is to not give any user direct editor access through the console. All operations on infrastructure happen through service account impersonation.
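In Terraform, impersonation is configured on the Google provider itself. A minimal sketch, assuming the impersonated account is the per-project atlantis-local service account and that it lives in the project it manages:

```hcl
variable "project_id" {
  type = string
}

# Terraform authenticates with the caller's own credentials (for Atlantis, the
# VM's service account) and requests short-lived tokens for atlantis-local.
# The caller needs roles/iam.serviceAccountTokenCreator on that account.
provider "google" {
  project                     = var.project_id
  impersonate_service_account = "atlantis-local@${var.project_id}.iam.gserviceaccount.com"
}
```

Since the tokens are short-lived and minted on demand, no long-lived key for atlantis-local ever has to exist.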

When creating a project we create two service accounts:

- atlantis-local: This service account is assigned admin roles over the project created and is impersonated by Atlantis for provisioning infrastructure within the project.
- project-admin: This service account is used for break-glass privilege access elevation.
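The two per-project service accounts could be declared as follows. This is a sketch assuming the Terraform Google provider; the post only says atlantis-local receives "admin roles", so the role list here is illustrative, and granting roles/owner to project-admin is an assumption about what break-glass access means.

```hcl
variable "project_id" {
  type = string
}

variable "atlantis_roles" {
  type = set(string)
  # Illustrative only: the actual admin roles are not listed in the post.
  default = ["roles/compute.admin", "roles/iam.serviceAccountUser"]
}

resource "google_service_account" "atlantis_local" {
  project      = var.project_id
  account_id   = "atlantis-local"
  display_name = "Impersonated by Atlantis to provision resources"
}

resource "google_service_account" "project_admin" {
  project      = var.project_id
  account_id   = "project-admin"
  display_name = "Break-glass privilege elevation"
}

# Project-level roles Atlantis acts with when it impersonates atlantis-local.
resource "google_project_iam_member" "atlantis_local" {
  for_each = var.atlantis_roles
  project  = var.project_id
  role     = each.value
  member   = "serviceAccount:${google_service_account.atlantis_local.email}"
}

# Assumed break-glass role.
resource "google_project_iam_member" "project_admin_owner" {
  project = var.project_id
  role    = "roles/owner"
  member  = "serviceAccount:${google_service_account.project_admin.email}"
}
```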

During project creation we also take two Google groups as inputs:

- project_admins_group: Group of human users allowed to impersonate the tenant-admin service account during break-glass situations.
- project_users_group: Group of human users with additive viewer privileges on the infrastructure within the project.
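A sketch of how the two groups might be wired up with the Terraform Google provider. The break-glass account is passed in as a hypothetical variable, the group addresses are inputs, and roles/viewer stands in for the viewer privileges mentioned above; the exact roles Skynet grants are not stated.

```hcl
variable "project_id" {
  type = string
}

variable "break_glass_sa_id" {
  type        = string
  description = "Hypothetical: projects/<project>/serviceAccounts/<email> of the break-glass account."
}

variable "project_admins_group" {
  type        = string
  description = "Group email, e.g. project-admins@example.com."
}

variable "project_users_group" {
  type = string
}

# Admins get no direct roles; they can only act as the break-glass account.
resource "google_service_account_iam_member" "admins_can_impersonate" {
  service_account_id = var.break_glass_sa_id
  role               = "roles/iam.serviceAccountTokenCreator"
  member             = "group:${var.project_admins_group}"
}

# Assumed read-only role for regular users.
resource "google_project_iam_member" "users_viewer" {
  project = var.project_id
  role    = "roles/viewer"
  member  = "group:${var.project_users_group}"
}
```

Binding the token-creator role on the service account rather than on the project limits admins to impersonating that one account.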

A service account with no roles is created and attached to the instance template used to deploy Atlantis. This service account is granted roles/iam.serviceAccountTokenCreator on each project's atlantis-local service account, which lets Atlantis create resources within that project through impersonation.
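The arrangement above can be sketched with the Terraform Google provider. The Atlantis host project, the atlantis-server account ID and the tenant project list are hypothetical; the only grants are token-creator bindings on each project's atlantis-local account.

```hcl
variable "atlantis_project" {
  type        = string
  description = "Hypothetical project that hosts the Atlantis MIG."
}

variable "tenant_projects" {
  type        = set(string)
  description = "Projects whose atlantis-local account Atlantis may impersonate."
}

# Identity attached to the Atlantis instance template. It holds no project roles.
resource "google_service_account" "atlantis_server" {
  project      = var.atlantis_project
  account_id   = "atlantis-server" # hypothetical name
  display_name = "Atlantis server (no roles)"
}

# Per project: allow the Atlantis server to mint tokens for atlantis-local.
resource "google_service_account_iam_member" "atlantis_impersonation" {
  for_each           = var.tenant_projects
  service_account_id = "projects/${each.value}/serviceAccounts/atlantis-local@${each.value}.iam.gserviceaccount.com"
  role               = "roles/iam.serviceAccountTokenCreator"
  member             = "serviceAccount:${google_service_account.atlantis_server.email}"
}
```

If the Atlantis VM were compromised, its own identity would grant nothing directly; every action still goes through a per-project account that can be audited or revoked individually.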

Organization policies are in place to disable service account key creation and upload.
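Both restrictions correspond to Google-managed boolean constraints. A minimal sketch using the provider's google_organization_policy resource, with the organization ID as an input (the newer google_org_policy_policy resource can express the same policy):

```hcl
variable "org_id" {
  type = string
}

locals {
  key_constraints = toset([
    "constraints/iam.disableServiceAccountKeyCreation",
    "constraints/iam.disableServiceAccountKeyUpload",
  ])
}

# Enforce both constraints across the whole organization.
resource "google_organization_policy" "no_sa_keys" {
  for_each   = local.key_constraints
  org_id     = var.org_id
  constraint = each.value

  boolean_policy {
    enforced = true
  }
}
```

With keys blocked at the organization level, impersonation becomes the only way to act as a service account.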

Wrapping Up

Skynet aims to be the single source of truth for infrastructure deployment at Gojek. Its main win is giving users enough flexibility and ownership while still maintaining standards across the codebase.

Skynet ensures this by defining strict organization policies, selective IAM permissions, and enforcing an impersonation-first workflow to provision infrastructure resources.

Huge thanks to the people whose invaluable efforts shaped Skynet into what it is today: Aaron Yang, Annamraju Kranthi, Maitreya Mulchandani, Mark Statham, Mrityunjoy Chattopadhyay, Nikhil Chaudhari, Nikhil Makhijani, Pardeep Bhatt and Ram Sharma.
